AI Search Builder

Crunchbase gives users access to a plethora of valuable data – financial, investment, and growth insights for over 3,000,000 companies, 280,000 investors, and more – but archaic filtering and search patterns make this data hard to find and sift through.

I incorporated AI and natural language processing to help users search in a streamlined, natural way.

Context

Crunchbase is a company data platform for salespeople, investors, and CEOs to find and close business.

My role

Product design

UX research

My impact

4x increase in 6-month retention

11% increase in users saving searches

4x paid users engaging with Saved Searches

Collaborators

Product manager and tech lead

UX researcher

Filters of the past

Crunchbase contains a vast amount of useful data on companies, investors, and funding rounds, but users are required to sift through hundreds of filters to search for the data they need.

When they find what they're looking for, it is incredibly valuable – users who create a search with 3+ filters, and then save or export those results, are more likely to be retained.

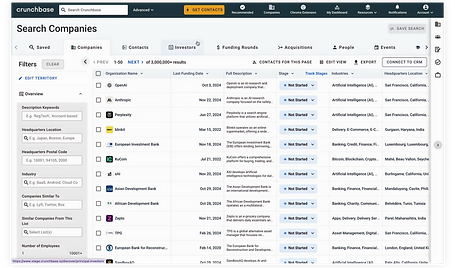

ORIGINAL UI

However, we were seeing slipping retention. For years, users told us about the difficulties they faced with Search: identifying which filters they would need, finding those filters, and updating them once applied. The entire experience was clunky and in desperate need of a refresh.

In a nutshell:

Users know their data need, but they don't know how to translate it into filters

Finding and refining selected filters is difficult because of the cluttered UI

Without the right filters selected, users don't find or act on useful results

(and eventually, abandon Crunchbase)

This is where I came in: our data team had been developing an AI filter builder, and my scrum team and I were tasked with incorporating the technology into Advanced Search.

Building context

My first step was understanding our existing technology, human behavior, and their implications for our new solution.

AI filter builder

The builder translates questions written in plain English into filters, with roughly 75% accuracy.

Human behavior

People refine their questions step by step until the answer is clear.

This led my PM and me to our initial hypothesis, which shaped the first round of designs: users would naturally gravitate toward either the AI-powered filter builder or the manual filters, choose their preferred path, and continue using that consistently.

Iteration 1: Designing for the split

To test our hypothesis, I designed a clear split between AI and manual filters, with an emphasis on the AI filter builder. Users could engage with AI or pick manual filters, and to help them refine their search, selected filters appeared just above the results.

I partnered with our front-end lead and researcher to build and test the design. Feedback was mixed: some participants found the search process faster and easier with AI, while others struggled with prompt writing and questioned the AI’s reliability.

Overall, users welcomed the addition of AI into the process, but needed more guidance and clarity between the two paths, which led to our next iteration.

Iteration 2: Building confidence

Two clear themes emerged from our research, and I built both into V2 of the search layout:

Build confidence by example

Iteration 2 put examples of AI queries front and center so users would feel confident writing their first searches.

Relate AI and filters

I placed the AI input and filters in the same module to reinforce how AI queries lead to filters being selected.

With confidence in our new direction, my PM, eng lead, and I worked closely to develop a 6-week beta program to test the design.

Beta test success

At the end of the program, I scheduled calls with 14 beta participants for my PM and me. After using the design for 6 weeks, our participants shared some incredibly insightful feedback.

FINDING 1

Users who aren't comfortable with filters generally loved AI search

Power users still felt that manual searches were more efficient for them, and at times didn't trust our AI, but they all preferred the beta over the original.

FINDING 2

Users really, really want us to improve the experience around engaging with results.

Through these user calls, my PM and I formalized a user journey that helped us ideate beyond query creation and influenced the rest of the design process for this work.

Find

"I want to effortlessly find a targeted group of companies based on specific criteria"

EXPERIENCE NEEDS

Fast, accurate to mental model, discoverable

Verify

"I want to ensure the results are accurate and relevant to my desired criteria"

EXPERIENCE NEEDS

Configurable, scannable

Analyze

"I want to deeply understand trends, patterns, and insights from my search results"

EXPERIENCE NEEDS

Understandable, actionable

Iteration 3: Optimizing the flow

Informed by users' natural workflows, I used visual hierarchy to reinforce the user journey that led our beta participants to success.

By moving the AI search input above the filters, I guided users through the optimal workflow:

1. Start the search with the AI filter builder (Find),

2. Review the results (Verify),

3. Make small tweaks to the search by manually adding or removing selected filters.

To enable better focus on results, I included an auto-filter collapse when users scroll down to the table.

The design entered an A/B test for 6 more weeks, and then we evaluated our success.

Auto-collapse microinteraction

Redesign and launch!

After success in our A/B test, leadership greenlit our design for full release. With some visual redesign and plenty of follow-up tasks, AI Search launched on Crunchbase.

The key ingredients for my design process:

Crafting the user journey around evolving AI capabilities

Understanding the iterative ways people ask and refine questions

Balancing executive feedback with user needs

Countless Figma iterations, one beta test, 24 user interviews, and an A/B test later, AI Search Builder launched in February 2025. So far, we have seen:

4x increase in 6-month retention

45% decrease in median searches per user before saving

11% increase in users saving searches

4x paid users engaging with Saved Searches